In backend development, Inter-Service Communication (ISC) refers to the exchange of data or messages between different services or components that collectively form a larger application or system. ISC enables these services to collaborate and work together to achieve a common goal.

In modern software architectures, applications are often built as a collection of loosely coupled services, each responsible for specific functionality.

These services can be deployed independently, scale individually, and communicate with each other through ISC mechanisms.

ISC in backend development plays a crucial role in building distributed systems and microservices architectures.

Some common ISC patterns and techniques used in backend development include:

HTTP/REST APIs: Services can communicate with each other over the HTTP protocol, using Representational State Transfer (REST) APIs. This involves making HTTP requests to specific endpoints exposed by the target service and receiving responses in a structured format, typically JSON or XML.

Messaging Queues: Services can exchange messages asynchronously through a messaging queue or broker. Services can publish messages to a queue, and other services can subscribe to that queue to receive and process the messages. This pattern allows for decoupling and scalability, as services can process messages at their own pace.

Remote Procedure Calls (RPC): RPC enables services to invoke methods or functions on remote services as if they were local. This allows for more direct and synchronous communication between services. Popular frameworks like gRPC and Apache Thrift provide mechanisms for implementing RPC-based ISC.

Message Brokers and Event Sourcing: Services can leverage message brokers like Apache Kafka or RabbitMQ to facilitate ISC through event-driven architectures. Events are produced and consumed by services, enabling them to react to changes and trigger actions based on those events. Event sourcing is a related pattern that stores the complete history of events and allows services to replay or analyze them.

Service Mesh: A service mesh is a dedicated infrastructure layer that handles communication between services. It provides capabilities like service discovery, load balancing, circuit breaking, and observability. Tools like Istio and Linkerd are commonly used to implement service meshes.
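To make the messaging-queue pattern from the list above more concrete, here is a minimal in-memory sketch. It is not a real broker: `InMemoryQueue`, the `OrderPlaced` event, and the "order" and "billing" roles are all hypothetical names chosen for illustration, and every subscriber receives every message, which a production queue may not do.

```typescript
// A minimal in-memory stand-in for a message queue (illustration only).
type Handler<T> = (message: T) => void;

class InMemoryQueue<T> {
  private readonly messages: T[] = [];
  private readonly handlers: Handler<T>[] = [];

  // Producers enqueue and return immediately; no consumer needs to be online.
  publish(message: T): void {
    this.messages.push(message);
  }

  // Consumers register a handler that is called when the queue is drained.
  subscribe(handler: Handler<T>): void {
    this.handlers.push(handler);
  }

  // Hands every pending message to every subscriber, in FIFO order.
  // Returns the number of messages delivered.
  drain(): number {
    let delivered = 0;
    while (this.messages.length > 0) {
      const message = this.messages.shift() as T;
      for (const handler of this.handlers) handler(message);
      delivered++;
    }
    return delivered;
  }
}

// Hypothetical event exchanged between an "order" and a "billing" service.
interface OrderPlaced {
  orderId: string;
  amount: number;
}

const orderEvents = new InMemoryQueue<OrderPlaced>();
const billed: string[] = [];

// The producer publishes before any consumer exists...
orderEvents.publish({ orderId: "o-1", amount: 40 });
orderEvents.publish({ orderId: "o-2", amount: 15 });

// ...and the consumer processes the backlog later, at its own pace.
orderEvents.subscribe((event) => billed.push(event.orderId));
const deliveredCount = orderEvents.drain();
```

The point of the sketch is the decoupling: the producer never waits for the consumer, and the consumer decides when to work through the backlog.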

What are Lambda Invoke and API Gateway invocation through Request, Axios, or any other HTTP request library in the context of ISC (Inter-Service Communication)?

Lambda invoke refers to triggering the execution of an AWS Lambda function directly through the AWS SDK. AWS Lambda is a serverless computing service provided by Amazon Web Services (AWS) that allows you to run code without provisioning or managing servers. When both services run on Lambda, direct invocation is generally the preferred way to achieve ISC.

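    import { Lambda } from 'aws-sdk'; // AWS SDK for JavaScript v2, which provides .promise()

    const lambda = new Lambda();

    // Synchronously invokes the target Lambda function and returns its parsed JSON response.
    async function thisIsAnExampleLambdaInvokeFunction(req: unknown): Promise<unknown> {
      const result = await lambda
        .invoke({
          // Name or ARN of the target function, read from an environment variable
          FunctionName: process.env.NAME_OF_YOUR_LAMBDA_FUNCTION as string,
          Payload: JSON.stringify({ data: req }),
        })
        .promise();

      return JSON.parse(result.Payload as string);
    }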

Inter-Service Communication in the case of Lambda Invoke
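The snippet above parses `Payload` without checking whether the invoked function itself failed. A synchronous invoke can report a failed function through the `FunctionError` field of the response, with the error details in `Payload`. The sketch below shows one way to handle that. `InvokeResult` models only the response fields used here, and `parseInvokeResult` is a hypothetical helper, not part of the AWS SDK.

```typescript
// Only the fields of a Lambda Invoke response that this sketch relies on.
interface InvokeResult {
  StatusCode?: number;
  FunctionError?: string;        // present when the invoked function failed
  Payload?: string | Uint8Array; // text or raw bytes, depending on the SDK version
}

// Hypothetical helper: returns the parsed payload, or throws if the function failed.
function parseInvokeResult<T>(result: InvokeResult): T {
  // Normalize the payload to a string regardless of how the SDK delivered it.
  const raw =
    typeof result.Payload === "string"
      ? result.Payload
      : new TextDecoder().decode(result.Payload ?? new Uint8Array());

  if (result.FunctionError) {
    throw new Error(`Lambda invocation failed (${result.FunctionError}): ${raw}`);
  }

  // An empty payload is treated as "no return value".
  return (raw.length > 0 ? JSON.parse(raw) : undefined) as T;
}

// Usage with hand-written responses standing in for real SDK output.
const fromText = parseInvokeResult<{ total: number }>({
  StatusCode: 200,
  Payload: '{"total":3}',
});
const fromBytes = parseInvokeResult<{ total: number }>({
  StatusCode: 200,
  Payload: new TextEncoder().encode('{"total":5}'),
});
```

Wrapping the parsing in a helper like this keeps error handling in one place when several services call each other.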

Invoking API Gateway through the Request or Axios library means making HTTP requests to an API Gateway endpoint with one of these libraries. API Gateway can be configured to act as a front end for your APIs, providing a centralized entry point through which clients interact with your backend services.

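    // Class method. Assumes `import Axios from 'axios'` at the top of the file;
    // `this.serverUrl`, `Config` and `config` come from the surrounding application.
    async thisIsAnExampleAPIGateWayRequest(req: any): Promise<any> {
      // POST the payload to the API Gateway endpoint
      const response = await Axios.post(
        this.serverUrl + Config.YOUR_RESOURCE_PATH.path,
        { data: req },
        {
          headers: {
            Authorization: config.get('AUTH_TOKEN'), // If required
          },
        }
      );

      // Axios exposes the parsed response body as `data`
      return response.data;
    }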

Inter-Service Communication in the case of Request / Axios via API Gateway

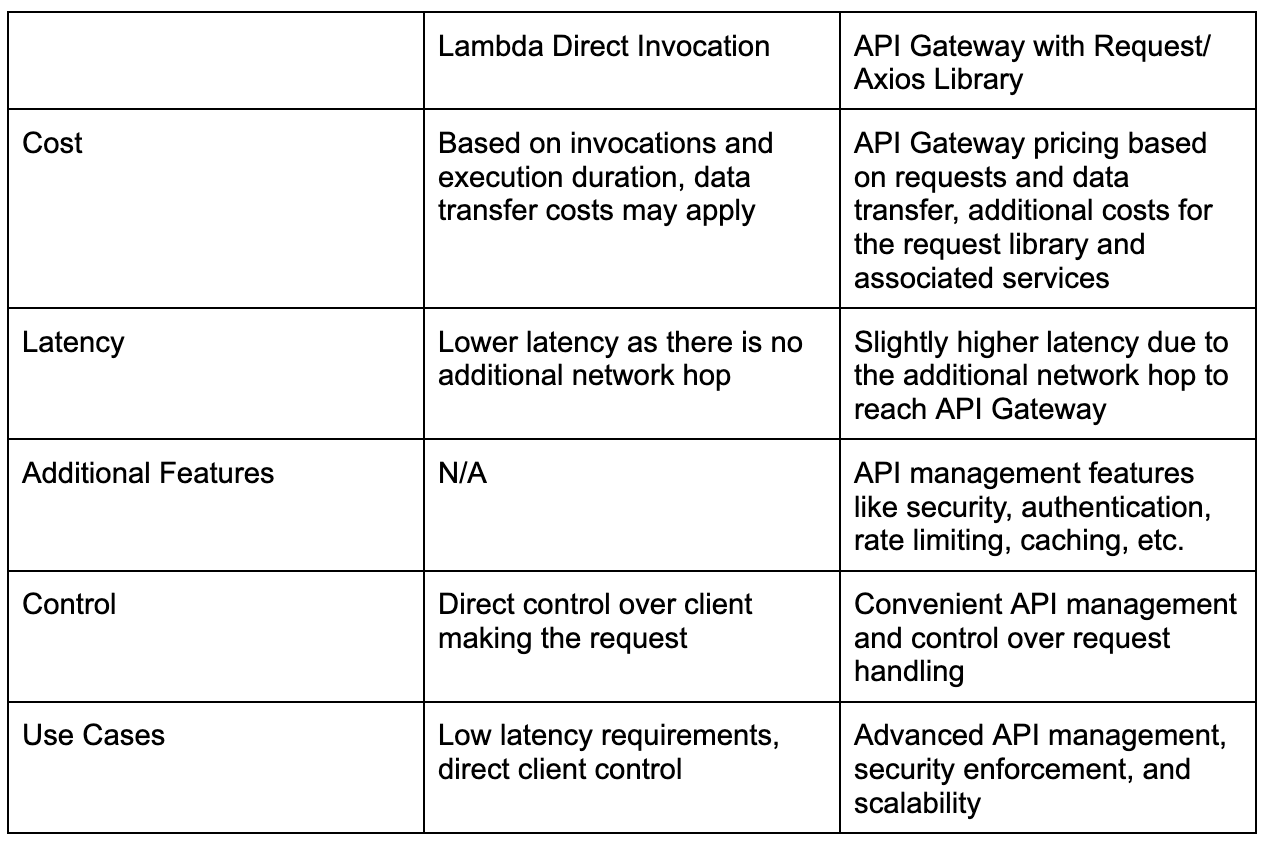

API Gateway introduces additional overhead for Inter-Service Communication (ISC). While it provides valuable features for API management, security, and scalability, it adds an extra network hop, and the latency that comes with it, compared to invoking a Lambda function directly. This overhead affects the end-to-end latency of every inter-service call.
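One way to check this overhead in your own setup is to time both call paths. The sketch below is a minimal timing harness. `averageLatencyMs` and `compareLatency` are hypothetical helpers, and the two stubs only simulate calls with made-up delays. They are not measurements; you would replace them with your real direct-invoke and API Gateway calls.

```typescript
// Average wall-clock latency (in ms) of an async call over several sequential runs.
async function averageLatencyMs(
  call: () => Promise<unknown>,
  runs = 5
): Promise<number> {
  let totalMs = 0;
  for (let i = 0; i < runs; i++) {
    const start = performance.now();
    await call();
    totalMs += performance.now() - start;
  }
  return totalMs / runs;
}

// Stand-ins for real calls. The delays are arbitrary placeholders.
const sleep = (ms: number) =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));
const directInvokeStub = () => sleep(5);
const viaGatewayStub = () => sleep(30);

// Measures both paths one after the other and returns the averages.
async function compareLatency(): Promise<{ directMs: number; gatewayMs: number }> {
  return {
    directMs: await averageLatencyMs(directInvokeStub),
    gatewayMs: await averageLatencyMs(viaGatewayStub),
  };
}
```

Averages hide tail latency and cold starts, so for a real comparison it is worth recording percentiles over many more runs.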

The table below summarizes how Lambda direct invocation and API Gateway with a request library compare on cost and latency:

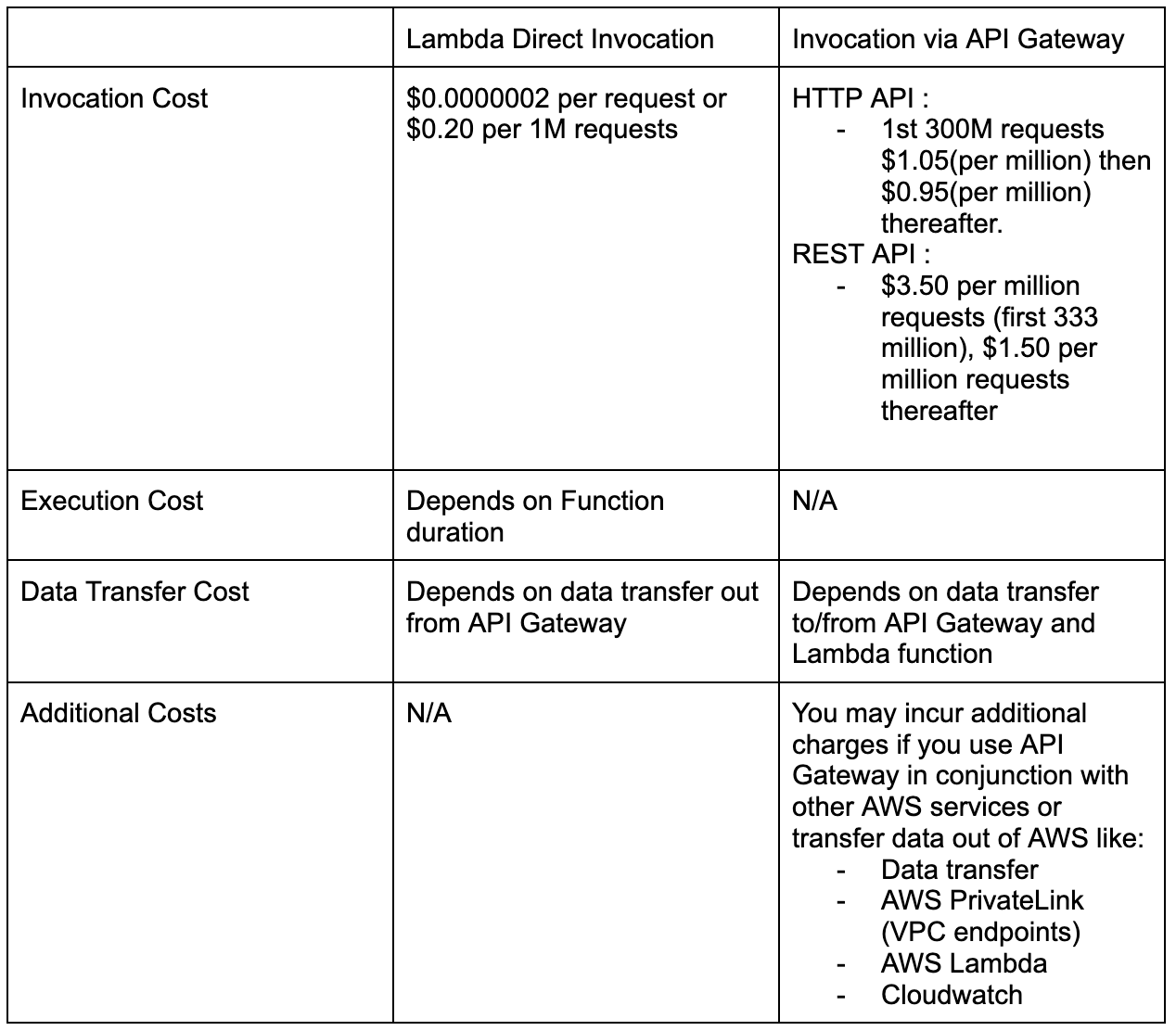

Cost comparison between Lambda Invoke and invocation through API Gateway

Let’s do a quick cost estimation:

Assumptions:

- Region: Asia Pacific (Mumbai)
- Requests per month: 50 million
- Memory allocation: 128 MB per execution
- Average estimated duration: 300 ms
- Data transfer per request: 3 KB

1. Lambda invoke:

   - Request cost = 50 million * $0.20/million = $10.00 / month
   - Execution cost = $31.26 / month
   - Total AWS Lambda charges = $10.00 + $31.26 = $41.26 / month

2. API Gateway invoke:

   - Amazon API Gateway API call charges = 50 million * $3.50/million = $175.00
   - Total size of data transfer = 3 KB * 50 million = 150 million KB ≈ 143 GB
   - Amazon API Gateway data transfer charges = 143 GB * $0.09/GB ≈ $12.90
   - Total Amazon API Gateway charges = $175.00 + $12.90 = $187.90 / month

If the API Gateway endpoint is backed by a Lambda function, the Lambda charges from item 1 still apply on top of the API Gateway charges.
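The arithmetic above can be reproduced with a few lines of code, which makes it easy to plug in your own traffic numbers. This is a sketch under stated assumptions: the request, API call, and data transfer prices are the ones used in the estimate, while the per-GB-second compute price is an assumed value that the estimate does not state. Check the current pricing pages for your region before relying on any of them.

```typescript
const MILLION = 1_000_000;

// Inputs taken from the assumptions in the estimate.
const requestsPerMonth = 50 * MILLION;
const memoryGb = 128 / 1024; // 128 MB expressed in GB
const durationSeconds = 0.3; // 300 ms
const payloadKb = 3;         // data transferred per request

// Prices in USD.
const lambdaPricePerMillionRequests = 0.2;
const lambdaPricePerGbSecond = 0.0000166667; // ASSUMED: not stated in the estimate
const apiGatewayPricePerMillionCalls = 3.5;
const dataTransferPricePerGb = 0.09;

// 1. Lambda direct invocation: request charge plus compute (GB-seconds) charge.
const lambdaRequestCost =
  (requestsPerMonth / MILLION) * lambdaPricePerMillionRequests;
const lambdaComputeCost =
  requestsPerMonth * durationSeconds * memoryGb * lambdaPricePerGbSecond;
const lambdaTotal = lambdaRequestCost + lambdaComputeCost;

// 2. API Gateway: per-call charge plus data transfer charge.
const apiCallCost =
  (requestsPerMonth / MILLION) * apiGatewayPricePerMillionCalls;
const transferGb = (requestsPerMonth * payloadKb) / (1024 * 1024); // KB -> GB
const transferCost = transferGb * dataTransferPricePerGb;
const apiGatewayTotal = apiCallCost + transferCost;

console.log(`Lambda invoke:  $${lambdaTotal.toFixed(2)} / month`);
console.log(`API Gateway:    $${apiGatewayTotal.toFixed(2)} / month`);
```

With these inputs the totals come out at roughly $41.25 and $187.87, within a few cents of the rounded figures in the estimate.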

In conclusion, the choice between Lambda direct invocation and API Gateway with a request library depends on specific considerations related to cost, latency, control, and additional features:

Lambda Direct Invocation is preferable when low latency is a primary concern and when you have direct control over the client making the request. It offers faster response times since there is no additional network hop to reach API Gateway, and it is the cheaper option in the estimate above.

API Gateway with a Request Library is advantageous when advanced API management features such as security enforcement, authentication, rate limiting, caching, or request validation are required. API Gateway provides a convenient solution for managing and securing APIs, at the price of a higher per-request cost and some added latency from the additional network hop.