What is Nginx?

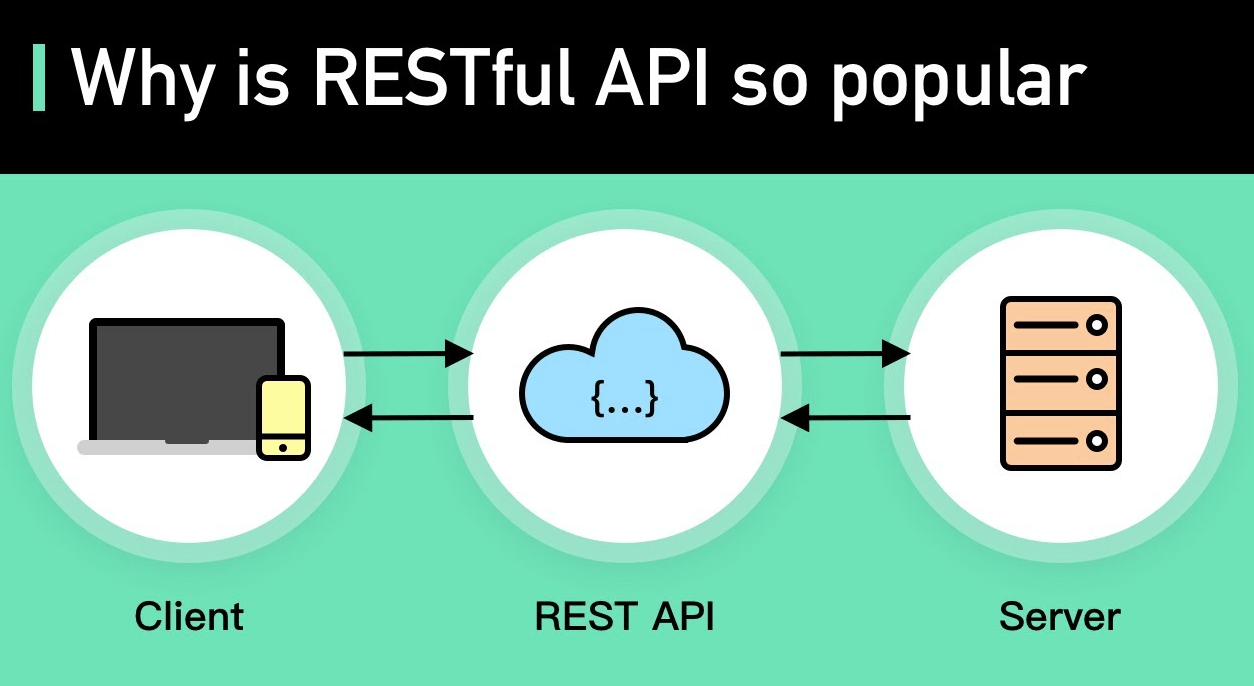

In simple terms, Nginx is a web server that can also work as a reverse proxy and as a load balancer that distributes requests across different servers.

Here, we use Nginx as the central traffic controller: it handles all incoming requests and forwards each one to the microservice responsible for the requested action or data. This approach allows for better management, security, and scalability in a microservices-based architecture.

Step 1: Set Up Microservices

Start by setting up the three microservices (user-service, product-service, and order-service) on separate ports, each serving its specific functionality.
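Any HTTP services will do for this step. As a minimal sketch for local experimentation, the following Python script starts three stub services using only the standard library. The service names mirror the ones above and the ports match the upstream blocks used in Step 3; the JSON response shape and the helper names make_handler, start_service, and main are assumptions made for illustration, not part of the original setup.

```python
import json
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Assumed ports for illustration; they match the upstream blocks in Step 3.
SERVICES = {"user-service": 8001, "product-service": 8002, "order-service": 8003}


def make_handler(service_name):
    """Build a handler class that reports which stub service answered."""

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            body = json.dumps({
                "service": service_name,
                "path": self.path,
                "host": self.headers.get("Host"),  # useful for checking the proxied Host header
            }).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, fmt, *args):
            pass  # keep the console quiet

    return Handler


def start_service(service_name, port, host="0.0.0.0"):
    """Start one stub service on a background thread and return the server object."""
    server = ThreadingHTTPServer((host, port), make_handler(service_name))
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def main():
    """Start all stub services and keep the process alive until Ctrl+C."""
    servers = [start_service(name, port) for name, port in SERVICES.items()]
    print("Stub services listening on ports", sorted(SERVICES.values()))
    try:
        while True:
            time.sleep(1)
    except KeyboardInterrupt:
        for server in servers:
            server.shutdown()
```

Calling main() at the end of the script keeps all three services running until you press Ctrl+C. The stubs bind to 0.0.0.0 so that a containerized Nginx can reach them through the host's IP address; real services would replace the handler with their own logic.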

Step 2: Run Nginx

If you already have Nginx installed and operational on your system, you can proceed directly to the next step!

Steps to run Nginx in Docker

Running Nginx in Docker is a straightforward process. Docker allows you to containerize Nginx, making it easy to manage and deploy. Below are the steps to run Nginx in Docker:

1: Install Docker

Ensure that you have Docker installed on your system. Visit the official Docker website (https://www.docker.com/) to download and install Docker for your operating system.

2: Pull the Nginx Docker Image

Open a terminal or command prompt and run the following command to pull the official Nginx Docker image from Docker Hub:

docker pull nginx

3: Run Nginx Container

Now that you have the Nginx image, you can create and run a Docker container using the following command:

docker run -d -p 80:80 --name my_nginx nginx

- docker run: Command to create and start a new container.
- -d: Detached mode, which runs the container in the background.
- -p 80:80: Maps port 80 of the host to port 80 of the container, allowing you to access Nginx on the host machine at [http://localhost](http://localhost).
- --name my_nginx: Assigns the name my_nginx to the running container.
- nginx: The name of the Docker image you want to use for the container.

Nginx is now running in the Docker container. You can access the Nginx web server by opening your web browser and navigating to http://localhost. If you see the default Nginx welcome page, it means Nginx is running successfully in the Docker container.

You can also stop the container with the following command:

docker stop my_nginx

Step 3: Configure Nginx as API Gateway

Create an Nginx configuration file that will act as the API gateway and route requests to the appropriate microservices based on the request URL.

File: /path/to/local/nginx.conf

# Define upstream blocks for each microservice
upstream user_service {
    server user-service-container-ip:8001; # Replace with the IP address of the user-service container and its actual port
}

upstream product_service {
    server product-service-container-ip:8002; # Replace with the IP address of the product-service container and its actual port
}

upstream order_service {
    server order-service-container-ip:8003; # Replace with the IP address of the order-service container and its actual port
}

# Main server block to handle incoming requests
server {
    listen 80;
    server_name api.example.com; # Replace with your actual domain or IP address

    location /users {
        # Route requests to the user-service
        proxy_pass http://user_service;
        proxy_set_header Host $host;
    }

    location /products {
        # Route requests to the product-service
        proxy_pass http://product_service;
        proxy_set_header Host $host;
    }

    location /orders {
        # Route requests to the order-service
        proxy_pass http://order_service;
        proxy_set_header Host $host;
    }

    # Add any other API routes and their corresponding services here
}

In the above configuration, the proxy_pass line is what makes Nginx act as a reverse proxy and forward requests to the backend server. The proxy_set_header Host $host; line is not mandatory, but it is highly recommended: without it, Nginx sends the name given in proxy_pass (for example, user_service) as the Host header instead of the host the client requested. Some backend services rely on the Host header to handle virtual hosts or domain-based routing, so including this line ensures that the backend receives the original host and can respond appropriately.

In some specific use cases, you might omit the proxy_set_header line if the backend service doesn’t require or use the Host header. However, it’s generally a good practice to include it to maintain consistency and to ensure that the backend service can handle various scenarios properly.
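To make the routing and Host header behavior concrete, here is a small Python model of what the configuration does. It is a simplified sketch, not how Nginx is implemented: it covers only plain prefix locations (no regex, exact-match, or ^~ locations), and the names ROUTES, pick_upstream, and host_seen_by_backend are hypothetical helpers introduced for illustration.

```python
# Prefix locations from the server block, mapped to their upstream names.
ROUTES = {
    "/users": "user_service",
    "/products": "product_service",
    "/orders": "order_service",
}


def pick_upstream(path, routes=ROUTES):
    """Return the upstream of the longest matching prefix, or None if nothing matches."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        return None
    return routes[max(matches, key=len)]


def host_seen_by_backend(client_host, upstream_name, forwards_host):
    """Model the Host header the backend receives.

    With proxy_set_header Host $host; the client's host is forwarded.
    Without it, Nginx's default sends the name used in proxy_pass.
    """
    return client_host if forwards_host else upstream_name


print(pick_upstream("/users/42"))      # user_service
print(pick_upstream("/usersettings"))  # user_service (prefix match is a plain string prefix)
print(pick_upstream("/health"))        # None
print(host_seen_by_backend("api.example.com", "user_service", forwards_host=True))   # api.example.com
print(host_seen_by_backend("api.example.com", "user_service", forwards_host=False))  # user_service
```

The second call shows a side effect worth knowing: location /users also matches paths such as /usersettings. Writing the location as /users/ narrows the match if that is not what you want.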

Step 4: Attaching the Configuration File to the Nginx Container

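Run the Nginx container again, this time mounting the local Nginx configuration file from the host machine into the container. If the my_nginx container from Step 2 still exists, remove it first with docker rm -f my_nginx, because Docker does not allow two containers with the same name: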

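docker run -d -p 80:80 --name my_nginx -v /path/to/local/nginx.conf:/etc/nginx/conf.d/default.conf:ro nginx

Replace /path/to/local/nginx.conf with the path to the local Nginx configuration file you created earlier. The file is mounted as /etc/nginx/conf.d/default.conf rather than /etc/nginx/nginx.conf because it contains only upstream and server blocks, which must sit inside an http block. The image's stock nginx.conf provides that http block and includes every .conf file in /etc/nginx/conf.d/.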

Step 5: Test the API Gateway

The API gateway has been successfully configured within the Nginx container, and it is now ready to route incoming requests to their respective microservices. To verify the functionality of the API gateway, we can conduct testing by sending requests to the designated endpoints:

- Requests sent to http://api.example.com/users are routed to the user-service, which handles user-related operations.

- Requests sent to http://api.example.com/products are routed to the product-service, which manages product-related functionality.

- Requests sent to http://api.example.com/orders are routed to the order-service, which processes order-related tasks.

Before testing, make sure that each microservice is up and running on the port specified for it in the configuration, and that it can handle the requests that correspond to its functionality.
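One way to run this check from a script is sketched below. It assumes the gateway is published on port 80 of the local machine, as in the docker run command; the base URL, the path list, and the function name check_routes are illustrative choices rather than part of the setup itself.

```python
import urllib.error
import urllib.request

# Assumption: the gateway container publishes port 80 on this machine.
GATEWAY = "http://localhost"
PATHS = ["/users", "/products", "/orders"]


def check_routes(base_url=GATEWAY, paths=PATHS, timeout=5):
    """Request each path through the gateway and return {path: status code or error text}."""
    results = {}
    for path in paths:
        try:
            with urllib.request.urlopen(base_url + path, timeout=timeout) as resp:
                results[path] = resp.status
        except urllib.error.HTTPError as err:
            # The gateway answered with an error status, e.g. 502 when an upstream is down.
            results[path] = err.code
        except (urllib.error.URLError, OSError) as err:
            # The gateway itself could not be reached.
            results[path] = f"unreachable: {err}"
    return results
```

Calling check_routes() returns one entry per path. A 200 suggests the request reached a backend, while a 502 usually means Nginx is running but could not connect to the upstream address. If the gateway's server block is the only one listening on port 80, Nginx treats it as the default server, so requests to http://localhost are handled by it even though server_name is api.example.com.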

Running Nginx as the API gateway in Docker has several advantages. Each service stays isolated and self-contained in its own container, which simplifies deployment, improves portability, and makes the system easier to maintain and scale. Using Nginx as the gateway also centralizes traffic management and gives you a single place to add load balancing and caching, which can improve performance, security, and the overall user experience.

Summary

In this article, we explored how Nginx can be harnessed as an API gateway to manage traffic and enhance the security of microservices-based architectures. We set up three services, demonstrated running Nginx in Docker, and created a custom configuration file to define routing rules. Leveraging Nginx as an API gateway streamlines interactions between clients and backend services, enabling developers to build scalable and efficient microservices applications with ease.